Hey everyone, sorry for the extended radio silence - it wasn't out of lack of interest, I promise.

GLoups' speedy modeling work prompted me to look for ways to contribute to the effort in some commensurate manner, but soon after that a seemingly minor favour request landed in my lap, which ended up taking about 20hrs to get out from under, and by that time it was Dec. 30th. I'd already had to set aside a disconcerting number of my typical traditions for the season (yet another instance of "doing holidays wrong") just to find the time to wrap that guff up, so I resolved to pick up no other item on my various lists until some jolly yuletide time was first had. Finally, on Jan. 3rd I resumed researching, experimenting, editing, troubleshooting and generally putting together an attempt that could be described as "objectively benchmarking Dinora's cliff diff", all of which is detailed right below.

The concept is simple: you make a few changes in a new map edit, and then you're left to wonder whether that actually achieved anything in terms of client-side rendering optimization. Whether some more zones or antiportal actors were added, or some other geometry was retouched, the only way we typically have gone about measuring success there has been by typing "stat fps" in the console and moving the spec cam roughly along the same path between versions, hoping the numbers improved a bit after our editing efforts.

But hold on a sec. Back in UT's older glory days weren't there supposed to've existed some more standardized ways to measure just that kinda performance, with specialized tools and entire communities dedicated to pushing framerates to the highest achievable point? Granted, that scene's long since shifted its attention to more contemporary concerns, but those ways of objectively measuring what mapping arrangements produce better results should still exist, right? If so, why not try and rediscover, maybe even repurpose and use 'em again, see what they might tell us about the fruits of GLoups' labour more specifically, but also if they could form the basis for a reusable GFX performance evaluation methodology?

The first part of that is understanding the components of that old benchmarking practice. On the game dev's own end, Epic made UT games benchmarking-friendly by building into the engine a framerate stats recording feature (accessed via the -benchmark command switch, and helpfully spitting out log reports, framerate averages and detailed CSVs in the game's Benchmark subfolder after you quit the game), but also by making specific maps amenable to standardized tests through "flyby" routes. Related to this, apparently UT2004 was kind of a step backwards in that regard compared to its immediate predecessor, and we were left with a pretty small subsection of the game's entire maplist to use for such purposes, as well as with no dev-built tools specifically for benchmarking. Either way, there's also the alternative, but equally reproducible, stress test of recording and playing back demos. As far as benchmarking tools go, the one my searches pointed to as being the most established and comprehensive for the job was UMark, so I'll talk about that further below.

One way to understand how this whole "flyby in an inert map" business works would be to open up in UEd special, non-gameplay, purpose-built stuff, like Mov-UT2-intro.ut2 or TUT-ONS.ut2, and study how it's all put together, but a few "legit" maps like DM-Asbestos could also help shed light on that. I did that, and it involved plenty of copy-pasting SceneManager, InterpolationPoint, UnrealScriptedSequence and other kinds of placed actors relevant to the game's Matinee subsystem from maps to the text editor to see what's in 'em and how all the events, tags and triggers were cross-referenced around. Equally confused and cocky, I then tried to Frankenstein together something similar of my own in Dinora, partly out of whole cloth, partly out of heavily text-edited, repurposed stuff. It was slow, frustrating, often UEd-crashing, and ultimately the stupid way to go about it when this exists as a very helpful, step-by-step guide to doing it all through UEd's specialized Matinee window.

That one actually produced results quickly, and, as it turns out, all it required was one SceneManager and a chain of InterpolationPoint actors, most of 'em added through said window's buttons - kinda fun too! Taking into account Mov-UT2-intro's neat idea that you can colour your InterpolationPoint actors as if they were [sun]light actors into matching groups for sanity's sake, I painted all mine red (just in case anyone wants to make their own flyby next to mine), and finally got a Dinora flyby working when manually triggering its event ingame through the "causeevent flyby1" console command (make sure you're a spectator or that you've spawned first as a player or it'll get wonky and require quitting/retrying). Getting the same assets copy-pasted across different edits of the same map turned into another headache (this one not self-induced though), but, fortunately, that too proved easily solvable. In the end, I opted to enable the SceneManager's bLooping property and leave it so in all flyby edits for more extended/stressful testing, but you can very easily disable that in UEd from the actor's properties dialog.

Lastly, all this brings us to this neat package those of you interested in any of this can now grab and test for yourselves:

Dinora_cliff-diff_flybies.rar (15.71MB, 3 Dinora edits with the exact same flyby course added, plus 2 HourDinora assets; live until Feb. 7th or so).

Flying around a map as good-looking as Dinora like some kind of disembodied head can be fun (and at times even intentionally reminiscent of Super Mario 64's end credits), but what does any of that say about rendering performance progress? Just having the fps counter enabled and trying to remember rough averages between tests wasn't particularly helpful, so I needed something closer to the raw figures. Enter UMark, a pretty nifty n' well-rounded (for its age) third-party tool that can act as a center for various UT200x benchmarking tasks, written by Jeffrey Bakker and released under the GPL, with versions dating from before UT2004 itself up to its last supported one, v2.0.0 (main site here, download from here). I didn't mention UMark being old just for flavour though. Sadly, this fact can get in the way of effortless installation and operation in a number of ways, so let's get those quirks out of the way first.

The installer itself, for one thing, doesn't even permit choosing a custom folder to extract files to, but presumptuously puts everything in C:\UMark instead, so you'll just have to look for it there before you can move it to someplace else (inside UT's Benchmark subfolder seemed the most sensible option to me). As if that weren't enough, for a tool as small as it is, UMark ignores best portability practices like housing its configuration in a nearby .ini file, and instead adds values to the Windows registry to find out where its folder resides, further complicating relocation and deployment. This means that, if you do decide to move its folder, you'll subsequently also need to launch "regedit" from your Windows' Run feature (also works from a command line window) and search for all instances of UMark, changing the path to the right one where applicable. For quick reference, the value that actually matters in getting UMark to run from a relocated folder is that of "Folder" in HKEY_LOCAL_MACHINE\SOFTWARE\Jeffrey Bakker\UMark, but if you care to open .umark documents through the tool (dunno why you'd ever bother), you also need to fix the path in HKEY_CLASSES_ROOT\UMark.Document\shell\open\command. That's installation covered.
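If hunting through regedit by hand sounds tedious, the "Folder" fix could also be expressed as a small .reg file. Below is a rough Python sketch that only builds the text of such a file. The key and value name are the ones quoted above; treating the value as a plain string, the function name, and the example path are my own assumptions, and on a 64-bit Windows the key of a 32-bit program may actually show up under a WOW6432Node branch instead, so check where regedit really lists it before importing anything.

```python
def umark_reg_file(new_folder: str) -> str:
    """Return .reg file text pointing UMark's "Folder" value at new_folder.

    Assumption: "Folder" is an ordinary string (REG_SZ) value.
    """
    key = r"HKEY_LOCAL_MACHINE\SOFTWARE\Jeffrey Bakker\UMark"
    # Inside a quoted .reg string, every backslash has to be doubled.
    escaped = new_folder.replace("\\", "\\\\")
    return (
        "Windows Registry Editor Version 5.00\r\n"
        "\r\n"
        f"[{key}]\r\n"
        f'"Folder"="{escaped}"\r\n'
    )
```

Calling umark_reg_file(r"D:\UT2004\Benchmark\UMark") and saving the result as, say, umark_folder.reg (a made-up name) gives you something you can inspect in a text editor first and import afterwards. Whether UMark expects a trailing backslash in that path is something I haven't verified, so copy the style of the value that's already in there.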

Upon running UMark, it'll seem like the tool has correctly identified your UT2004's presence and version, and is now ready to work; do not be fooled by this. Immediately close it, go to your UT's System subfolder, select your UT2004.ini and User.ini, copy 'em to clipboard, then go to UMark's Data subfolder, paste 'em in there and rename 'em to something like "myUT2004.ini" and "myUser.ini"; apart from always being a good plan to have a backup of both those files for standard reasons (they can get corrupted under freakish conditions), you do NOT want any external tool to be directly accessing them, so a copy for benchmarking reference should do. Even though you'll be pointing to those two in the tool, there's one other important change you need to do while in the Data subdir: open UMarkPerformance.ini and UMarkQuality.ini, find the "FirstRun" property halfway through each one's first page, and change the value in both to 3369, otherwise you'll waste time further configuring the specifics of a benchmark, only to launch it and see a dialog pop up saying you have an invalid CD key instead.
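For those who'd rather script this prep step than do it by hand, here's a minimal Python sketch of the same thing. The file names are the ones mentioned above; the function names are hypothetical, and I'm assuming the two UMark files are plain single-byte text with the property written as a "FirstRun=<number>" line.

```python
import re
import shutil
from pathlib import Path


def copy_inis(system_dir: Path, data_dir: Path) -> None:
    """Copy the game's two ini files into UMark's Data folder under new names."""
    shutil.copyfile(system_dir / "UT2004.ini", data_dir / "myUT2004.ini")
    shutil.copyfile(system_dir / "User.ini", data_dir / "myUser.ini")


def set_first_run(ini_text: str, value: int = 3369) -> str:
    """Rewrite the value of every FirstRun=... line, leaving all else untouched."""
    pattern = r"(?mi)^([ \t]*FirstRun[ \t]*=)[^\r\n]*"
    return re.sub(pattern, rf"\g<1>{value}", ini_text)


def patch_umark_inis(data_dir: Path) -> None:
    """Apply set_first_run to both UMark ini files in place."""
    for name in ("UMarkPerformance.ini", "UMarkQuality.ini"):
        path = data_dir / name
        # latin-1 round-trips every byte, so nothing else in the file changes.
        text = path.read_bytes().decode("latin-1")
        path.write_bytes(set_first_run(text).encode("latin-1"))
```

With my folder layout that would be copy_inis(Path(r"D:\UT2004\System"), Path(r"D:\UT2004\Benchmark\UMark\Data")) followed by patch_umark_inis on the same Data folder. Back up the two UMark files first in case the assumed line format doesn't match what's actually in yours.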

There's a couple more things to note before launching a benchmark, so I'll cover them in random order here. Much like the game itself, the tool is old and therefore couldn't account for all the possible screen resolutions people use. Much like the game itself, there's easy workarounds for this, so just pick one from the resolution tickboxes list on the left of the program that's close enough to your actual one and the fix will be applied elsewhere; just keep in mind for now that this is not a "radio button" choice where only one can be made at a time, so accidentally ticking two or more resolutions will multiply the total benchmark tests by a factor equal to the number of different resolutions (say, 3 maps listed and 2 resos will make for a total of 6 successive launches of UT2004 for GFX performance recording). The tool can measure performance with any one of three methods (flyby, demo playback, or AI bot match, with the last one being obviously the most prone to variance across tests), but there's no immediate way for a novice to tell which one's selected. Setting the Bots dropdown value to 0 and keeping the Timedemo box unticked is how you pick the flyby tests. Now, remember how I mentioned this odd program doesn't use an .ini to store your settings? That means you're gonna have to keep selecting and feeding it your two "Custom Ini File and User Ini File" choices every single damn time you run it. Fun. Anyway, do that, then you can move on to clicking the thin, vertical > button to expand the maplist section.
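To make the "maps times resolutions" arithmetic concrete, here's a tiny Python sketch that lists every launch a given selection would produce. The function name is made up, and the order in which UMark actually goes through the combinations is not something I've checked; only the total count is what matters here.

```python
from itertools import product


def planned_runs(maps: list[str], resolutions: list[str]) -> list[tuple[str, str]]:
    """Every (map, resolution) pair, i.e. one game launch per pair."""
    return list(product(maps, resolutions))
```

So three maps and two ticked resolutions give a list of six pairs, matching the 6 successive launches mentioned above.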

Note here that you'll only be able to add maps to the list if they've already been placed in the game's Maps subfolder and seen there by UMark upon its launch, regardless of any additional maps folders you might've also specified in your UT2004.ini (did I mention it's old n' quirky?). You can add a bunch of random maps in the list just to attempt to save it and learn that it's a plain old .txt file with a single full filename per line; making your own list, then, with just the maps you want to run and loading that is the simplest way I've found to streamline things from there. Lastly, remember that setting your proper screen resolution was a pending task, so now that you've selected the type of benchmark you want to do (flyby, presumably), go to UMark's Data subfolder, open the relevant .txt that is to be executed upon game launch (UMarkFly.txt), create a new first line, type in "setres 1280x768x32" or whatever width, height and colour depth fits your setup, and, finally in the case of these Dinora flyby maps, adjust the caused event from "flyby" to "flyby1" right below, otherwise nothing will actually be triggered once the game starts.
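Both text files from this step are simple enough to generate, so here's a hedged Python sketch of how that might go. The function names are hypothetical, and I'm assuming the event line in UMarkFly.txt has the form "causeevent <name>"; if yours looks different, edit it by hand instead.

```python
def make_maplist(map_files: list[str]) -> str:
    """One full map filename per line, like the lists UMark saves."""
    return "\n".join(map_files) + "\n"


def patch_exec_file(text: str, setres: str, event: str) -> str:
    """Put a setres line first and retarget the caused event.

    Assumption: the event is triggered by a line shaped like "causeevent <name>".
    Any existing setres line is dropped so re-running doesn't stack them up.
    """
    patched = [f"setres {setres}"]
    for line in text.splitlines():
        parts = line.split()
        if parts and parts[0].lower() == "setres":
            continue
        if len(parts) == 2 and parts[0].lower() == "causeevent":
            line = f"{parts[0]} {event}"
        patched.append(line)
    return "\n".join(patched) + "\n"
```

For the Dinora flyby maps that'd be patch_exec_file(old_text, "1280x768x32", "flyby1"), with the result written back to UMarkFly.txt. All other lines pass through unchanged, which matters because UMark relies on them.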

Once you press the "Benchmark!" button, what UMark essentially does is launch the game a number of times, each one with a customized set of command line arguments reflecting your settings. In my case, that looks like

D:\UT2004\System\UT2004.exe ONS-Dinora-32p-a5_flyby.ut2?game=engine.gameinfo -benchmark -seconds=77 -nosound exec="D:\UT2004\Benchmark\UMark\Data\UMarkFly.txt" ini="D:\UT2004\Benchmark\UMark\Data\myUT2004.ini" userini="D:\UT2004\Benchmark\UMark\Data\myUser.ini" -1280x1024.

You might think that some of that is extraneous, or be tempted to launch the game this way yourself without even using the program in the hopes of simplifying things, but do keep in mind the following: Without the engine.gameinfo part, you're liable to spawn in there and ruin the benchmark instead of the event getting triggered itself. By changing the -seconds switch, you can obviously arbitrarily change the duration of each test, even though for UMark it's hardcoded to 77 seconds (I think) for some odd reason. Without the executed file's command list and the correct flyby event that all happen the instant the game loads, you would need to trigger the event yourself once ingame. Without the command list's apparently invalid "ship" line (it's not an actual UT2004 command), UMark won't know to capture the stats, so you'll just have to sift through the produced results in the other Benchmark subdirs on your own.
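If you do want to skip UMark and launch the thing yourself (for a custom duration, say), the command line above can be assembled with something like this Python sketch. It only mirrors the switches already shown in this post; the function name and parameter names are mine, and the paths you feed it are whatever applies to your own install.

```python
def flyby_command_line(
    exe: str,
    map_file: str,
    exec_file: str,
    game_ini: str,
    user_ini: str,
    resolution: str,
    seconds: int = 77,
) -> str:
    """Build a launch line in the same shape as the one UMark produces."""
    return (
        f"{exe} {map_file}?game=engine.gameinfo "
        f"-benchmark -seconds={seconds} -nosound "
        f'exec="{exec_file}" ini="{game_ini}" userini="{user_ini}" '
        f"-{resolution}"
    )
```

The returned string can be pasted into a shortcut or a .bat file, or handed to Python's subprocess.run on Windows. Keep in mind that without UMark watching, you'll be collecting the results from the game's Benchmark subfolders yourself.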

A different kind of fair warning is also merited here: it seems like the game's built-in benchmarking mode/CLI-switch doesn't really care about minor human conveniences like observing a "normal" tickrate (or preventing nausea, for that matter) and just speeds through the whole thing as fast as your hardware can go, as if, say, "slomo 9" or something had been typed into the ingame console, so brace yourselves for a pretty quick flyby (it was ~7x normal speed for me). If you want it done at a proper, leisurely pace, you'll need to fire up a normal offline game, and, in the way mentioned above, manually cause the event via the causeevent command.

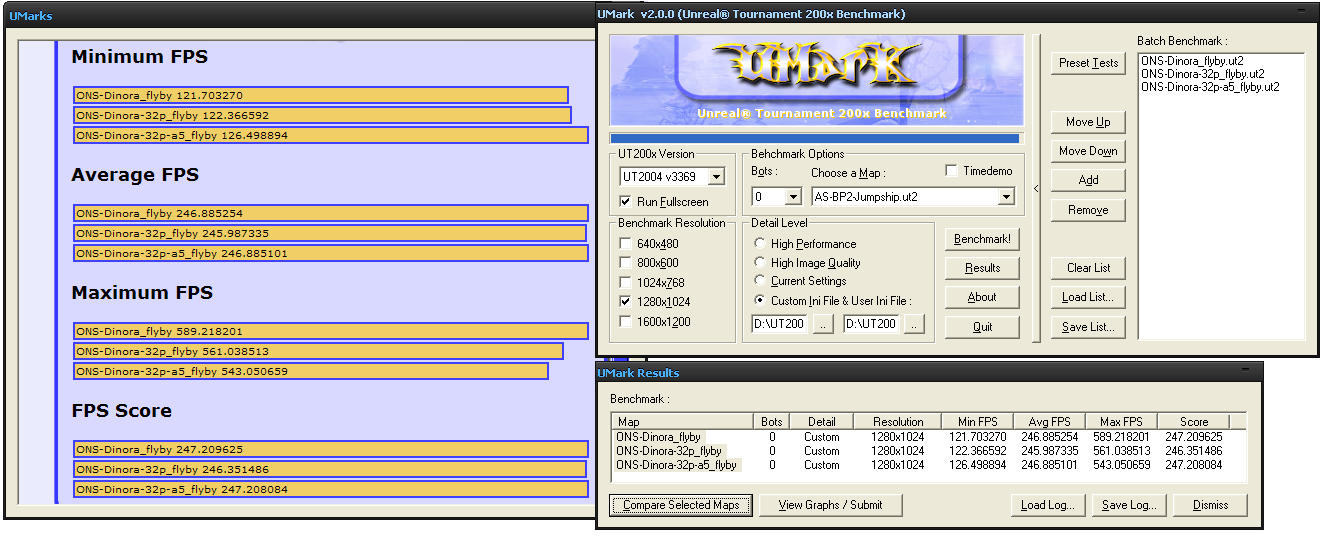

Finally, somewhere 'round this point the exciting buildup of this trip must end with the rather unfortunate reality of the benchmark results themselves. See, I ran the entire set of 3 maps through a number of benchmarks via UMark, and then again using custom durations of my own (154secs) and normal fly speed, but in all cases the min/avg/max framerate results I got were not what I'd expected to see, given my understanding of rendering performance and map editing experience over the past decade or so. If anything, the rendering performance difference between the old, "solid cliff st.mesh" edits and the "segmented in multiple st.mesh strips" arrangement of the new one was so small as to be unindicative of any significant improvement. Take a look at one such case:

Dinora-comparative-benchmark-flybies-fps-UMark-scores.png (120.75 KiB)

I'm not sure what to attribute this to. Perhaps the CullDistanceVolume might've played some detrimental role in graphics calculations comparable to the presumed gain from the stripped st.meshes, but if that assumption is also wrong, I'm just out of ideas here. Not to mention, if gains aren't visible in the sterile context of no ingame activity, things would be even less perceptible when all sorts of other gametype-relevant actors would be active n' consuming parts of the rendering budget during a match, thus drowning the "signal" in much more "noise".
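For anyone repeating these runs and wanting to put a number on "too small to matter", here's a crude Python sketch. It takes the average-fps scores from several repeated runs of an old edit and a new one, and checks whether the difference between them is bigger than the run-to-run wobble. This is just a rule of thumb of my own choosing, not a proper statistical test, and all the names are made up.

```python
from statistics import mean, stdev


def relative_gain(old_runs: list[float], new_runs: list[float]) -> float:
    """Percentage change of the mean score going from the old edit to the new one."""
    old_mean = mean(old_runs)
    return (mean(new_runs) - old_mean) / old_mean * 100.0


def gain_exceeds_noise(old_runs: list[float], new_runs: list[float]) -> bool:
    """True if the means differ by more than the combined run-to-run spread.

    Needs at least two runs per edit, since the spread is a standard deviation.
    """
    spread = stdev(old_runs) + stdev(new_runs)
    return abs(mean(new_runs) - mean(old_runs)) > spread
```

If gain_exceeds_noise comes back False for a pair of edits, the flyby alone can't tell them apart, which is pretty much the situation I ran into.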

The bottom line here is that, after repeated tests that pointed to this same state of affairs, I'm both baffled and saddened to conclude that it seems I've wasted GLoups' time, apparently for no meaningful gain. I'm sorry, man, guess I was wrong about this :/.